
My Other Computer is a Racecar

Experiments in building computers in very "not recommended" configurations.

Written 2021-02-17 3365 words.

Every project starts with a bad idea

It's early September 2019, and I have just come home from spending three months overseas living with my girlfriend. I spent an embarrassing amount of that time playing Factorio with her. Factorio is a wonderful game. It has engaging problem-solving sandbox gameplay and endless community mods, which let you turn it into an unholy grind of a hyper-realistic industry simulator. It is also one of the few games that could actually run on the laptop I took with me. My laptop is no slouch, however; it's a top-of-the-line ThinkPad from only a couple of years ago (it's still under warranty). But while trying to do development on it over the summer, I realised that I really missed my desktop. It wasn't the horsepower I missed, but the generally better quality of life that comes with a "full size" computer. I came home with two ideas stuck in my head:

- I don't really play 3D games any more.

- I really would like a desktop computer that could fit in my hand luggage.

I realised that my current computer, a full tower monster with twin RX Vega GPUs, was a terrible fit for how I actually used my desktop. It spent most of its life bored, running Firefox, VSCode, and KiCad. Why did I need a 1000W gaming monster boiling my room day and night for that? It was time to build a new PC. This saddened me a little, because meeting my new portable, low-power dream meant giving up my Vegas: to fund the new machine I would have to sell the old one. Has anyone mentioned CPUs depreciate like bricks?

If it wasn't for the stupid "T2" chip Apple installed in the 2018 Mac Mini, this would be a very short blog post. The then-new release was very tempting, offering 6 cores and up to 32GB of user-upgradable RAM. But that bloody T2 prevents running Linux, at least if you want to use the internal SSD. I consider storage in a computer essential, so Apple are still a long way from ever getting any money from me. It was time to look at other options, so I outlined a list of requirements:

- Minimum of 32GB of RAM and 8 cores.

- Small enough to fit in my rucksack.

- 10Gb Ethernet

- No laptop CPUs

The requirement for an 8-core CPU came from my existing desktop. At the time I was running an Intel i7 5930K that had cost me my firstborn child less than 3 years prior. I was already unhappy with how its Haswell architecture was ageing, and laptop CPUs were starting to beat it in multithreaded benchmarks. As mentioned earlier, I don't really game. When I put my computer to work, it's on CPU-heavy tasks like compiling my huge Rust code bases, or sometimes running a simulation or a non-accelerated render. So 8 cores was a must. Ryzen 3000 had just launched and looked like the best option. However, none of the 8-core-and-up Ryzen 3000 parts have integrated graphics, which locked me into needing a video card in the system. But I also needed 10Gbit Ethernet, and naturally not a single X570 ITX motherboard had this feature.

I needed two add-in cards in an ITX system! :shocked:

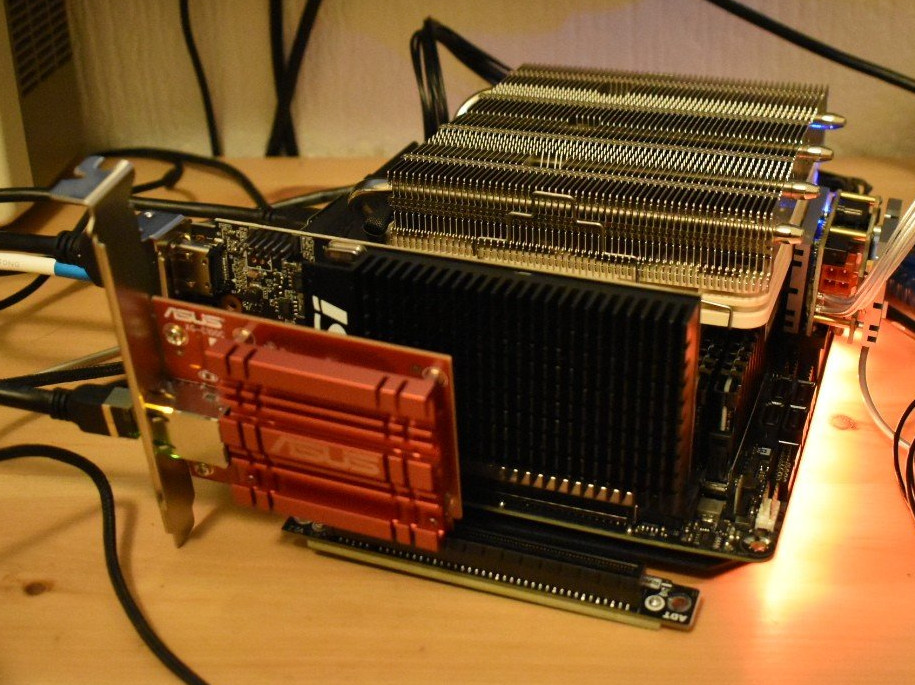

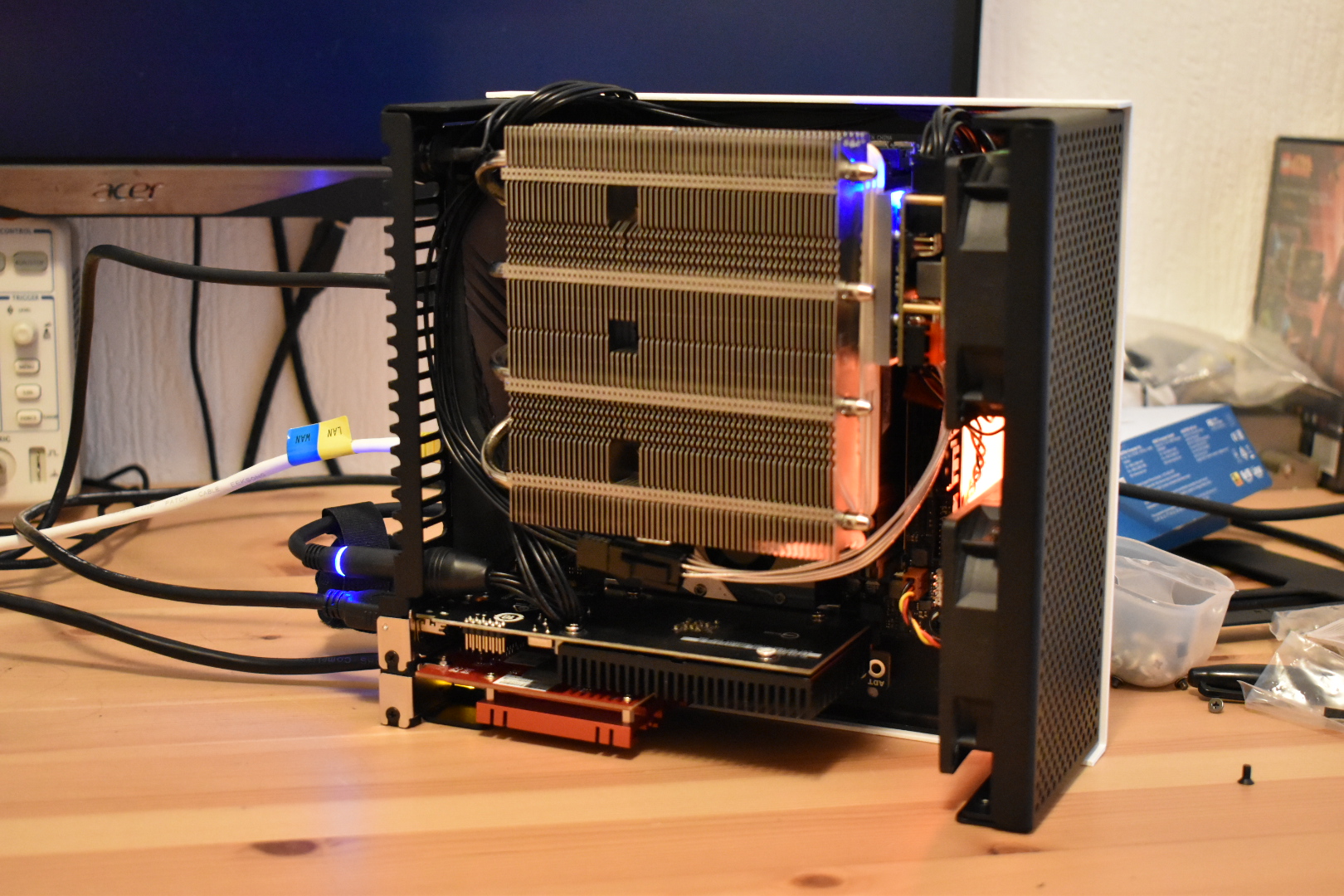

If I am honest, the design for the PC I would end up building was focused around the case from the very start. Ever since I entered the enthusiast PC scene at 16, I had lusted over cases by a small Canadian firm called Lone Industries, and the latest rendition of their design, the L5, was very desirable. From early on in the design process I knew I wanted to push the L5 as far as possible. The L5 is a very non-traditional case. It's ITX compatible, but only has room for "low profile" add-in cards. It also has no space for a power supply, instead requiring you to select from a list of pre-approved DC-DC converters, which receive power from an external PSU, like a laptop power brick. Naturally this makes everything more constrained: getting power into the system is as much of a challenge as getting the heat back out. Despite the constraints, even from the product page it was clear there was room for activities in the L5, especially if traditional SATA storage was not needed.

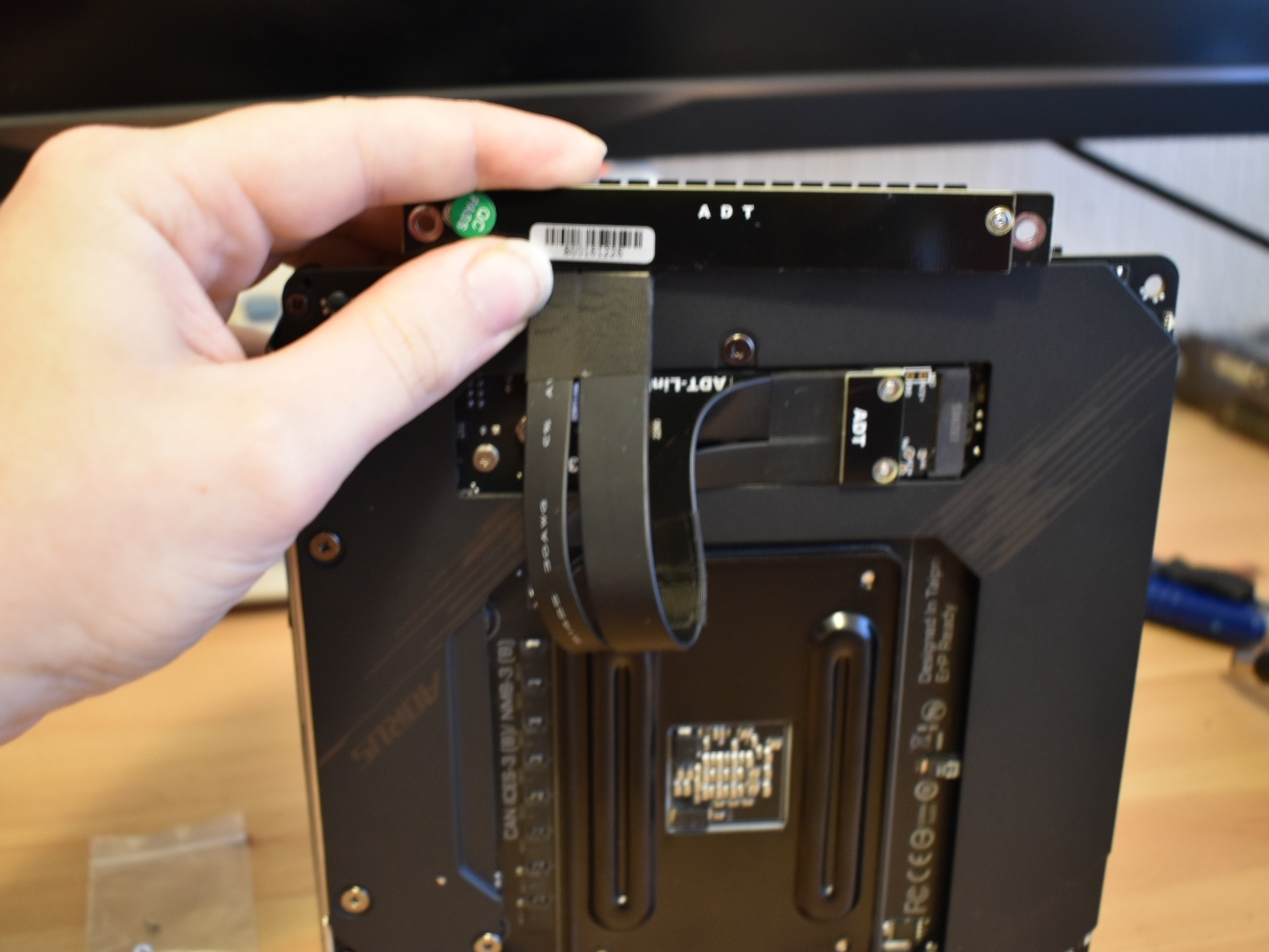

With the challenge of this project in focus, it was time to solve the problems and pick some parts. The first problem was getting 10Gbit into an ITX system. The L5 has two card slots, intended for double-width GPUs, so there is mechanical space to fit a second add-in card, but how do you get PCIe to it? Elaborate M.2 storage configurations are the latest craze vendors seem to be forcing onto gamers who don't actually need them, so EVERY modern ITX motherboard has two M.2 NVMe slots. Each one is basically a PCIe x4 connector disguised as a storage slot. Many of these boards put the second slot on the back of the board, and luckily China makes adaptors for literally everything. The problem of putting 2 cards in an ITX motherboard can be solved for $15 including shipping, if you're willing to run a janky ribbon cable behind your motherboard to where the card will go.
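It is worth checking why an M.2 slot has enough bandwidth for a 10Gbit card. The rough sketch below works out the usable line rate of a PCIe x4 link after 128b/130b encoding. It is a back-of-envelope calculation, not a measurement from this build. Whether the second M.2 slot runs at PCIe 3.0 or 4.0 depends on the board, so both are computed as assumptions. Packet and flow-control overhead are ignored.

```python
# Back-of-envelope PCIe bandwidth check. Assumption: whether the second M.2
# slot runs at PCIe 3.0 or 4.0 depends on the board, so both are computed.
GT_PER_LANE = {3: 8.0, 4: 16.0}  # transfer rate per lane, GT/s
ENCODING = 128 / 130              # PCIe 3.0 and 4.0 both use 128b/130b line coding


def pcie_gbps(gen: int, lanes: int) -> float:
    """Usable bit rate per direction in Gb/s after line coding.

    Ignores packet and flow-control overhead, which costs a little more in practice.
    """
    return GT_PER_LANE[gen] * ENCODING * lanes


if __name__ == "__main__":
    nic_gbps = 10.0  # one 10GbE port
    for gen in (3, 4):
        link = pcie_gbps(gen, lanes=4)
        print(f"PCIe {gen}.0 x4: {link:.1f} Gb/s, {link / nic_gbps:.1f}x one 10GbE port")
```

Even at PCIe 3.0, an x4 link works out to roughly 31.5 Gb/s in each direction. So on paper, the slot itself is not the bottleneck for a single 10GbE port.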

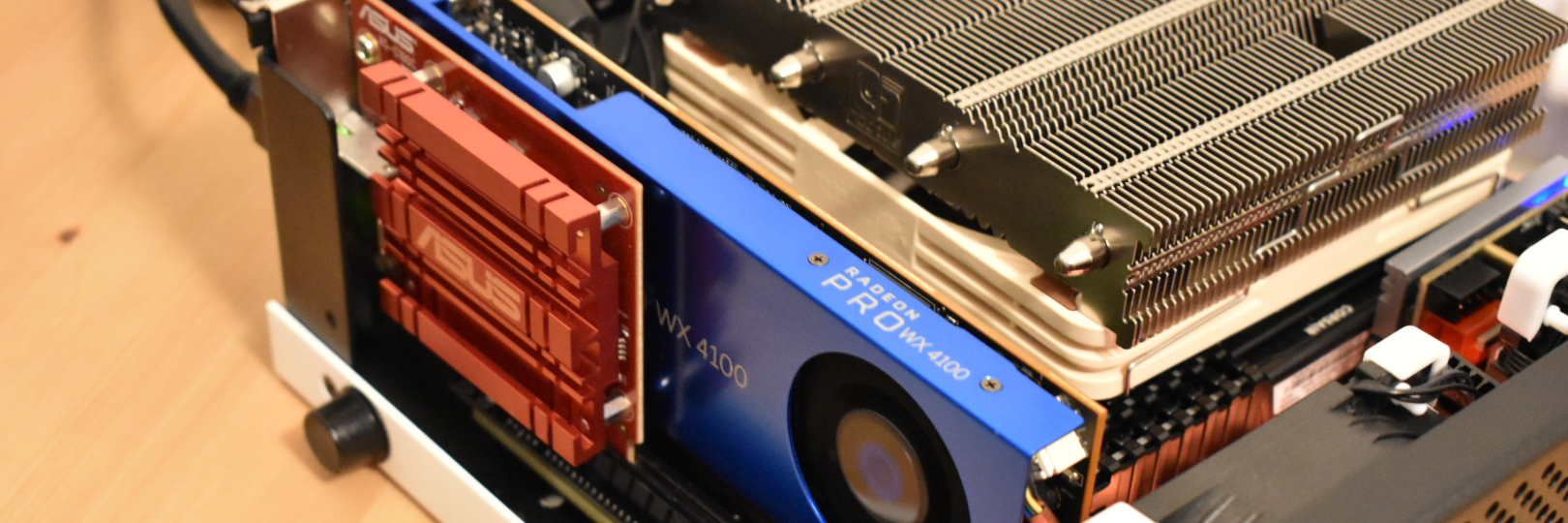

Sacrificing the second card slot severely limited my GPU options. Googling "fastest single slot half height GPU" will give you a lot of results on forums full of other crazy people trying to build tiny desktops for various reasons. The consensus among these people (as of late 2019) is that the Nvidia P1000 is the fastest, but if you hate Nvidia, the AMD WX4100 is nearly as fast. This was going to be a Linux workstation, so the WX was preferable. On top of that, it was £50 cheaper. That might not sound like much, but paying £300 for a GPU with the performance of a GTX 1050 hurts, and £250 for something that is literally a rebadged RX560 isn't much better. On the plus side, it is bright blue. (I mean bright blue!)

I knew this build was going to be truly unique. Because of that GPU, it was also going to be ridiculously expensive no matter what I did, and X570 pricing wasn't helping either. So I decided to max out the specs. If this thing was gonna break its budget, it was gonna do it in style. 32GB RAM DIMMs had just become available (I had never pre-ordered hardware before) and AMD's new R9 parts were sexy AF. £800 later, the specs were raised to 64GB of RAM and a 12-core CPU.

I gritted my teeth as I ordered the parts. With the variety and obscurity of the parts in this build, I had to order from 7 different suppliers (including Lone directly for the case, and two eBay sellers). The parts came from all over the world: the DC-DC converter (something most PCs don't even have) came from Germany, the case came from Canada, the M.2 adapter was shipped directly from China, and I am pretty sure the PSU "fell off a truck" destined for Dell UK.

Build day, AKA "what have I done?"

It took over a month for all of the parts to arrive. I had to test most of them individually. One faulty power converter was returned before the computer was even built.

Turning the pile of parts into a working machine was a multi-part ordeal. I pieced the machine together as parts came in, carefully testing each part of the design as if I had engineered it myself rather than slapped it together from off-the-shelf parts. I had zero faith this would actually work; pushing the envelope of the design had eroded away all the safety margins.

The M.2 mod worked flawlessly, and I immediately had a functioning network card, despite having to put two very tight bends in the riser cable.

The case and the GPU arrived last. Squeezing it all into the case took hours the first time (a year later, I can strip down and rebuild the thing in about 20 minutes), but it was worth it: the finished machine in its case was smaller than the box the motherboard shipped in. Powering it on for the first time was different. I have been building computers for years, and the usual "will it work?" anxiety was nothing compared to this. I knew it worked; I had tested it. But from the moment I powered it on, I owned something that I had hacked into existence. It was (and at the time of writing I believe still is) unique!

And oh was it fast!

It was able to crush the compile jobs my old desktop had been struggling with. The machine proved its usefulness at Christmas, travelling overseas in my hand luggage without issue. That said, every time the TSA see it I get a puzzled look, though I haven't yet had to explain why I am carrying a homebuilt computer around with me.

The other goal, a small low-power desktop, is also going well: I can leave the machine on 24/7 without it boiling the room I leave it in.

Every computer has a personality, so every computer deserves a good name. At the time I was naming my machines after anime characters, so this one got dubbed "Radical Edward" (or just Edward for short). Edward is small, energetic, and totally mad! They also always wear white.

"You don't own something 'till you take a soldering iron to it"

By May 2020, I was trapped in a university dorm room during the UK's first COVID-19 lockdown. My nearest neighbour was 3 buildings over on the university campus, and I was starting to realise that an ultra-small portable workstation wasn't so useful when there was nowhere to go. With my now ample free time for gaming, I was starting to miss the dual Vegas of my old computer. In particular, I missed the second Vega.

My old rig didn't have two GPUs for CrossFire or OpenCL. The second GPU was for passing through to a guest operating system in a VM. I never got that working right with the Vega, but my 10Gbit Ethernet card was no use in a dorm room, so I pulled it and went GPU hunting on eBay. My goal was to find the most powerful GPU that would fit and still supported Windows XP. I would use an XP VM to run some old games and kill some time during lockdown. The newest series of cards from either chip manufacturer to support XP was the ATI Radeon HD 7000 series. I found a £15 HD7470 that hit the sweet spot between performance and "I am broke cos I built this bloody computer 6 months ago". It was an old Dell OEM part, which seemed fitting; it would not be the last Dell part in this story.

Once the card arrived, the fun began. After installing the GPU, I found that it firmly did not work. Frustrated, I pulled the shiny new WX4100, scratching it on the shitty £15 GPU's screws in the process. Testing with just the HD7470 confirmed the GPU worked fine, and reminded me how bad AMD's Linux drivers were before AMDGPU. (The 7470 is a Turks Pro core, which AMDGPU doesn't support, leaving the legacy radeon driver or fglrx. The less said about fglrx the better.) Installing the WX4100 in the second slot actually resulted in a no-POST. Debugging could have been long and frustrating, but I am an electronic engineering student, and the culprit seemed pretty obvious to me.

V = IR

M.2 slots are not intended to power PCIe cards. With the added length of ribbon cable, power delivery to the 15W network card must already have been pretty marginal. The adapter actually came with a short cable for providing additional power, but there was never room to plug it in because the GPU was in the way. GPUs, however, can pull a lot more power: up to 75W. With that much current demand, the voltage droop was just too much for the card to fire up. There was no choice: to continue, I needed to plug in the additional power connector, but there was simply no room between the cards.
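To put rough numbers on that droop, the sketch below applies V = IR to the path between the slot and the card. The 12V rail and the 0.1 Ω path resistance are purely illustrative assumptions, not values measured on this adapter or cable. The point is only that the voltage lost scales linearly with current. A card drawing five times the power loses five times the voltage along the same path.

```python
# Rough voltage-droop estimate using Ohm's law (V = I * R).
# Hypothetical values: neither was measured on the real adapter or cable.
SUPPLY_V = 12.0  # assumed rail voltage feeding the card, volts
PATH_R = 0.1     # assumed combined resistance of slot pins and ribbon cable, ohms


def droop(power_w: float, supply_v: float = SUPPLY_V, path_r: float = PATH_R) -> tuple[float, float]:
    """Return (current in A, voltage lost across the path in V) for a given load.

    First-order approximation: current is taken as P / V at the nominal rail
    voltage, ignoring the small extra current drawn as the voltage sags.
    """
    current = power_w / supply_v   # I = P / V
    return current, current * path_r  # V_drop = I * R


if __name__ == "__main__":
    for name, watts in (("15W network card", 15.0), ("75W GPU", 75.0)):
        amps, lost = droop(watts)
        print(f"{name}: {amps:.2f} A, {lost:.3f} V lost, {SUPPLY_V - lost:.3f} V at the card")
```

With these made-up numbers, the 15W card loses 0.125V while a 75W load loses 0.625V over the same path. The real resistance could be quite different.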

I considered cutting the shroud of my beautiful WX4100, but no... if I had to butcher a part, it should be the cheapest one in the system. I have a saying: "you don't own something until you have taken a soldering iron to it". I own my computer; after stripping down the entire system, I carefully desoldered the connector. I now had a 12V hole and a ground (0V) hole. The power converter has a connector for a GPU cable, but I didn't have the cable kit that came with it, and I couldn't get one (see: lockdown). But I did have an old TFX power supply lying around that I used for experiments.

It had a 4-pin CPU connector that fit, and the cable colours were even correct! Best of all, it was a Dell part; it was perfect! I felt bad snipping the wires of my trusty old PSU, but it needed to be done. I soldered this new connection to the adapter and reassembled the machine, running the cables behind the motherboard and connecting them to the power converter.

Turning on the resulting hacky mess was the most nerve-wracking thing I have ever done. If the connections were inverted, or shorted together, or I had screwed something else up, there were no safety fuses here; the entire computer would literally go up in very expensive smoke. That thankfully didn't happen, and for extra credit, the GPU now worked immediately. (The driver still sucked tho.)

What I realised after powering it on is I quite possibly own the smallest dual discrete GPU system in the world!

What came next was the really annoying bit I should have tested first: Windows XP cannot boot from UEFI, so it cannot run on OVMF :facepalm: I relented. What followed was a textbook installation of a Windows 7 guest on OVMF with GPU passthrough on Arch Linux, and an evening of playing The Sims 2. Once I got bored of that, my GPU spent the next 3 months running Microsoft Word while I did my coursework. (A total waste.)
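For anyone attempting something similar, a common first step in VFIO GPU passthrough is checking which IOMMU group the second GPU lands in. A device generally has to be passed through together with everything else in its group. The Python sketch below walks `/sys/kernel/iommu_groups`, the standard sysfs location on Linux. It assumes the IOMMU is enabled in both firmware and kernel, and it is an illustration rather than a record of how this machine was configured.

```python
from pathlib import Path


def iommu_groups(root: str = "/sys/kernel/iommu_groups") -> dict[int, list[str]]:
    """Map each IOMMU group number to the PCI addresses of the devices in it."""
    groups: dict[int, list[str]] = {}
    base = Path(root)
    if not base.is_dir():
        return groups  # IOMMU disabled, or not running on Linux
    for group_dir in base.iterdir():
        devices = group_dir / "devices"
        # Each group directory is named by its number and holds a devices/ dir
        # whose entries are named after PCI addresses such as 0000:0b:00.0.
        if group_dir.name.isdigit() and devices.is_dir():
            groups[int(group_dir.name)] = sorted(d.name for d in devices.iterdir())
    return dict(sorted(groups.items()))


if __name__ == "__main__":
    for group, devs in iommu_groups().items():
        print(f"group {group}: {', '.join(devs)}")
```

Run it on the host and look for the GPU's PCI address as reported by `lspci`. Ideally, the GPU and its HDMI audio function sit in a group of their own.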

The first time it went wrong

The machine worked great over the summer. It went back overseas (more funny looks from TSA agents). It came home. It learnt how to make music with me. Then, one week after I moved back into a university dorm: Bang!

I would love to say it went "bang" with a straight face, but the truth is it didn't. It crashed while building a Linux kernel, and after that it could never survive a sustained CPU load again. What I had feared all along had finally happened: my racecar of a computer had thrown a part. Sod's law states it has to be one of the exotic parts that is impossible to source during a global pandemic. I feared it was the power converter. Only one week after leaving for uni, I had to traipse home, broken computer in hand, hunting for debugging spares. I hooked up a regular PSU in place of the converter and accepted my fate as I fired up the machine to run a stress test.

But no. It shut down almost immediately. Something else was wrong, even though I had stripped it down to the bare board and the parts needed to boot. I was sure it couldn't be the GPU, but swapped it anyway. Bad RAM couldn't reboot a system under CPU load. So that left two suspects: the motherboard or the CPU.

Let's just say f*ck you, Gigabyte! I ran an R9 3900X underclocked and undervolted in that board for 10 months. If your VRM can't survive that, you shouldn't be building motherboards. Amazingly, I have only ever had three PC components fail on me in my life, and two of them were Gigabyte motherboards. (The third was a Ryzen 1600 with the "SEGFAULT bug", which was supposed to be impossible on R5s. I guess the CPU didn't like my nickname :shrug:)

£300 later, the machine has a new Asus X570 board, which runs quieter and far more stably, at lower voltages too. I should have bought it a year ago. Thank god it wasn't the power converter; those were out of stock everywhere.

The middle of the story

This experiment was a big risk, but overall I am happy with the machine. It's not something I would recommend anyone else attempt.

I am writing this in February 2021, under yet another lockdown. I have had about a year and a half to get used to this machine's quirks, and I am still using it as my daily driver. The thing is crazy high maintenance, needing regular cleaning or it gets very hot. The lack of GPU performance is a real sting in the tail when you do want to do something 3D. That said, owning a 12-core workstation that you can cram in a backpack has real productivity advantages, at least until a global lockdown removes the need for anything to be portable.

Now I am just waiting for AMD to announce some small Navi GPUs. Any day now, right... right?

Cya, time to compile some more stuff - SEGFAULT.

Postscript 15-02-2024: Three more years of living with Edward

Edward got me through my university degree. It built countless projects and ran games well. It went on a few more flights, but after October 2021 I moved permanently back to Berlin. Here its portability was less useful, and admittedly it doesn't get moved so often any more. It's been to a few LAN parties, and on an excursion or two to the local hackerspace, but realistically none of this strictly needed the computer to be as small as it is.

At over four years old, that sluggish WX4100 is now outright anaemic by modern standards; in many cases an IGP can outpace it. So in 2022 I replaced it with a dual-slot Nvidia A2000. That ended the niche of having a two-card ITX system and somewhat removed the soul of the machine.

It is, however, still very cool, and quite a talking point. It is pretty fun to slap it down at a LAN party and watch everyone's jaws drop that I am gaming on "that". It is even more fun when it still outperforms everyone else's machines. Most people's computers are fungible boxes, the only real creative decision being what colour to set the RGB. Edward is a statement that computation doesn't have to be boring, and small doesn't have to be slow.

That statement is wearing out, though. The computer isn't ageing gracefully: the DC-DC converter buzzes and whines under load. I can't blame it. It's spent its entire life operating at over 110% of its rated load, and with the new GPU that's more like 140%. The CPU, once a fire-breathing monster, is starting to look quite tame next to the latest generation of desktop CPUs and even the latest ARM chips from Apple. Even the A2000 struggles in moderately demanding games now that I work from home and 4K has become my default resolution.

Edward remains a very special machine, but once again my computational needs are changing. It does still run Factorio great, though.

Also, that DC-DC converter I was scared I had broken in 2020 is still out of stock.